
The learning rate determines how much the weights and biases change after every iteration so that the loss is minimized; we have set it to 0.1. It is during the activation step that the weighted inputs are transformed into the output of the system. As such, the choice and performance of the activation function have a large impact on the capabilities of the ANN. I built this patch after reading a number of blog posts on neural networks. Andrej Karpathy’s post “A Hacker’s Guide to Neural Networks” was particularly insightful.

We also set the number of iterations and the learning rate for the gradient descent method. As we can see, the Perceptron predicted the correct output for logical OR. Similarly, we can train our Perceptron to predict the AND and XOR operators. But there is a catch: while the Perceptron learns the correct mapping for AND and OR, it fails to map the output for XOR because the data points are not linearly separable, so we need a model that can learn this kind of complexity. Adding a hidden layer helps the Perceptron learn that non-linearity.
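The behaviour described above can be reproduced with a minimal sketch. It assumes the classic perceptron learning rule with a step activation and zero-initialised weights; the helper names `train_perceptron` and `predict` are hypothetical and not taken from the original code. The learning rate of 0.1 follows the text.

```python
import numpy as np

def train_perceptron(X, y, lr=0.1, epochs=100):
    """Perceptron learning rule with a step activation (illustrative helper)."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        for xi, target in zip(X, y):
            # Non-negative weighted sums go to class 1, negative ones to class 0.
            pred = 1 if xi @ w + b >= 0 else 0
            update = lr * (target - pred)
            w += update * xi
            b += update
    return w, b

def predict(X, w, b):
    return (X @ w + b >= 0).astype(int)

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
gates = {
    "OR": np.array([0, 1, 1, 1]),
    "AND": np.array([0, 0, 0, 1]),
    "XOR": np.array([0, 1, 1, 0]),
}

results = {}
for name, y in gates.items():
    w, b = train_perceptron(X, y)
    results[name] = predict(X, w, b)
    print(name, "predicted", results[name], "target", y)
```

OR and AND are linearly separable, so the rule settles on a separating line. No single line separates the XOR points, so at least one of the four XOR inputs is always misclassified, however long the loop runs.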

- We ascribe the superior behavior of these genotypes to the very compact genetic representation, paired with a good adaptation policy.

- Natural language has several elements that interact with each other and, therefore, it can be described by a set of variables referring to each of its basic elements, which makes its study challenging.

- In our case, since we are just memorizing these values, we don’t have that luxury.

- We can see that the first iterations were largely a matter of luck, with accurate results for half of the outputs, but after the second iteration it provides a correct result in only one-quarter of the iterations.

Hidden layers are those layers with nodes other than the input and output nodes. In any iteration, whether testing or training, these nodes are passed the input from our data. Let the output layer weights be wo and the hidden layer weights be wh. We’ll initialize our weights and expected outputs as per the truth table of XOR. We will use mean absolute error to implement a single-layer perceptron.

The theoretical neural network is given in the picture below. I want to replicate the same using the MATLAB neural network toolbox. Hello, I am new to neural networks in MATLAB, and I want to create a feedforward neural net to calculate the XOR of two input values. Let’s train our MLP with a learning rate of 0.2 over 5000 epochs.

Adding input nodes (image by author, using draw.io)

Finally, we need an AND gate, which we’ll train just as we have been. We know that a datapoint’s evaluation is expressed by the relation wX + b. The perceptron basically works as a threshold function: non-negative outputs are put into one class while negative ones are put into the other class.

Quantum Error-Correcting Codes

When I tried the same code on a Windows machine, the network failed to converge several times, but on a Mac it always converged. So, if it does not converge for you the first time, try a few times and see if it works. Input is fed to the neural network in the form of a matrix, so we have to define the dimensions (a.k.a. shape) of the input and output matrices. X’s shape will be because one input set has two values, and the shape of Y will be . To explain the XOR relationship between axiom and logic in the linguistic chain, we bet on the fractal feature of natural language in the production of value/meaning.

It’s differentiable, so it allows us to comfortably perform backpropagation to improve our model. Backpropagation is a way to update the weights and biases of a model starting from the output layer all the way to the beginning. The main principle behind it is that each parameter changes in proportion to how much it affects the network’s output. A weight that has barely any effect on the output of the model will show a very small change, while one that has a large negative impact will change drastically to improve the model’s prediction power.
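As a worked illustration of that principle, consider a single sigmoid neuron trained with a squared error loss. The symbols $z$, $\hat{y}$ and $\eta$ (the learning rate) are introduced here for illustration and are not the original author’s notation:

$$
z = \sum_i w_i x_i + b, \qquad \hat{y} = \sigma(z) = \frac{1}{1 + e^{-z}}, \qquad L = \tfrac{1}{2}(\hat{y} - y)^2
$$

Applying the chain rule gives the gradient for each weight and for the bias, followed by the gradient descent update:

$$
\frac{\partial L}{\partial w_i} = (\hat{y} - y)\,\sigma(z)\bigl(1 - \sigma(z)\bigr)\,x_i, \qquad
\frac{\partial L}{\partial b} = (\hat{y} - y)\,\sigma(z)\bigl(1 - \sigma(z)\bigr), \qquad
w_i \leftarrow w_i - \eta\,\frac{\partial L}{\partial w_i}
$$

The factor $x_i$ makes the proportionality explicit: a weight attached to an input of zero receives no update at all, while a weight on a large input paired with a large error $(\hat{y} - y)$ changes the most.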

The bias is analogous to a weight independent of any input node. Basically, it makes the model more flexible, since you can “move” the activation function around. Neural networks are complex to code compared to classical machine learning models. If we compile the whole code of a single-layer perceptron, it will exceed 100 lines.

The designing process will remain the same with one change. We will choose one extra hidden layer apart from the input and output layers. We will place the hidden layer in between these two layers. For that, we also need to define the activation and loss function for them and update the parameters using the gradient descent optimization algorithm. This blog is intended to familiarize you with the crux of neural networks and show how neurons work. The choice of parameters like the number of layers, neurons per layer, activation function, loss function, optimization algorithm, and epochs can be a game changer.

Plotting output of the model that failed to learn, given a set of hyper-parameters:

1. Computing the output vector given the inputs and a random selection of weights in a “forward” computational flow. When the pattern counter value reaches p, the obfuscation key is updated. As sometimes the set of test patterns cannot be delivered at once, this feature offers the IP owner flexibility to dynamically add new patterns with updated obfuscation key.

I recommend playing with the parameters to see how many iterations are needed to reach a 100% accuracy rate. There are many possible combinations of parameter settings, so the choice really comes down to your experience and the classic examples you can find in “must read” books. Hence, it signifies that the Artificial Neural Network for the XOR logic gate is correctly implemented. Its derivative is also implemented, through the _delsigmoid function.

A potential decision boundary that fits our example (image by author, using draw.io)

We need to look for a more general model, which would allow for non-linear decision boundaries, like a curve, as is the case above.

Part 05 — Neural net XOR gate solver using Tensorflow and Keras

This function allows us to fit the output in a way that makes more sense. For example, in the case of a simple classifier, an output of say -2.5 or 8 doesn’t make much sense with regards to classification. If we use something called a sigmoidal activation function, we can fit that within a range of 0 to 1, which can be interpreted directly as a probability of a datapoint belonging to a particular class.

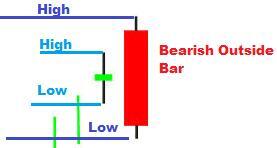

It was used here to make it easier to understand how a perceptron works, but for classification tasks there are better alternatives, like binary cross-entropy loss. Adding more layers or nodes gives increasingly complex decision boundaries. But this could also lead to overfitting, where a model achieves very high accuracy on the training data but fails to generalize.

The loss plot over 5000 epochs of our MLP (image by author)

A clear non-linear decision boundary is created here with our generalized neural network, or MLP.

1. Neural Networks

In W1, the values of weight 1 to weight 9 (in Fig 6.) are defined and stored. That way, these matrices can be used in both the forward pass and backward pass calculations. To solve the XOR problem, we consider two widely used tools that many software developers rely on. The first is Google Colab, which can be used to implement ANN programs by coding the neural network in Python.

The learning algorithm is a principled way of changing the weights and biases based on the loss function. An artificial neural network is made of layers, and a layer is made of many perceptrons. The perceptron is the basic computational unit of the neural network: it multiplies the input by the weight, adds the bias, and passes the result through the activation function to deliver the output to the next layer. Following this, two functions were created using the previously explained equations for the forward pass and backward pass. In the forward pass function, the input data is multiplied by the weights before the sigmoid activation function is applied.
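A minimal NumPy sketch of such a forward and backward pass is given below. It is not the original author’s code: the function names `forward` and `backward`, the two hidden nodes, the random seed and the squared error gradient are all assumptions made for illustration. The weight names `wh` and `wo`, the learning rate of 0.2 and the 5000 epochs follow values mentioned elsewhere in this post.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def forward(X, wh, bh, wo, bo):
    """Multiply by the weights, add the bias, apply the sigmoid, layer by layer."""
    h = sigmoid(X @ wh + bh)    # hidden activations, shape (4, n_hidden)
    out = sigmoid(h @ wo + bo)  # network output, shape (4, 1)
    return h, out

def backward(X, y, h, out, wo):
    """Gradients of the summed squared error 0.5 * sum((out - y) ** 2)."""
    d_out = (out - y) * out * (1 - out)  # error signal at the output node
    d_h = (d_out @ wo.T) * h * (1 - h)   # error signal pushed back to the hidden nodes
    return X.T @ d_h, d_h.sum(axis=0), h.T @ d_out, d_out.sum(axis=0)

rng = np.random.default_rng(0)  # assumed seed, for repeatability only
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([[0], [1], [1], [0]], dtype=float)

n_hidden = 2  # assumed hidden layer size
wh = rng.normal(size=(2, n_hidden))
bh = np.zeros(n_hidden)
wo = rng.normal(size=(n_hidden, 1))
bo = np.zeros(1)

lr, epochs = 0.2, 5000
losses = []
for _ in range(epochs):
    h, out = forward(X, wh, bh, wo, bo)
    losses.append(float(np.mean((out - y) ** 2)))  # mean squared error, for monitoring
    g_wh, g_bh, g_wo, g_bo = backward(X, y, h, out, wo)
    wh -= lr * g_wh
    bh -= lr * g_bh
    wo -= lr * g_wo
    bo -= lr * g_bo

print("first loss:", losses[0], "last loss:", losses[-1])
print("outputs:", out.ravel().round(3))
```

Whether the outputs end up close to 0, 1, 1, 0 depends on the random initialisation and the number of epochs; with only two hidden nodes the network can settle on a plateau, so it is worth trying a few seeds or a larger hidden layer.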

A single straight line can easily separate the data points of OR and AND. To separate the data points of XOR, however, we need two lines, or we can add a new dimension and then separate them using a plane. In the later part of this blog, we will see how an SLP fails to learn the XOR mapping and will implement an MLP for it.
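The “add a new dimension” idea can be checked by hand. In the sketch below, the third feature (the product of the two inputs) and the plane’s coefficients are hypothetical choices made for illustration; the text does not say which extra dimension to use.

```python
import numpy as np

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])
y_xor = np.array([0, 1, 1, 0])

# Hypothetical third feature: the product x1 * x2, which is 1 only for the input (1, 1).
X3 = np.column_stack([X, X[:, 0] * X[:, 1]])

# A hand-picked plane x1 + x2 - 2 * x3 - 0.5 = 0 in the lifted 3-D space.
w = np.array([1.0, 1.0, -2.0])
b = -0.5

pred = (X3 @ w + b > 0).astype(int)
print(pred)  # [0 1 1 0]
```

In the lifted space the point (1, 1, 1) falls on the negative side of the plane together with (0, 0, 0), which is exactly what no line in the original two dimensions can achieve.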


The NumPy library is mainly used for matrix calculations, while the Matplotlib library is used for data visualization at the end. When the inputs are replaced with X1 and X2, Table 1 can be used to represent the XOR gate. # This is expensive because it uses the whole dataset, so we don’t want to do it too often. Here we define the loss type we’ll use, the weight optimizer for the neuron’s connections, and the metrics we need.

By defining an XOR neural network, activation function, and threshold for each neuron, neurons in the network act independently and output data when activated, sending the signal over to the next layer of the ANN. The weights are used to define how important each variable is; the larger the weight of the node, the larger the impact a node has on the overall output of the network. Then you can model this problem as a neural network, a model that will learn and will calibrate itself to provide accurate solutions. As discussed, the activation function is applied to the output of each hidden layer node and the output node.


# Matrix multiplication of the layer 2 delta with the transpose of the first synapse function. The sigmoid function feeds the data forward by converting the numeric matrices to probabilities. The derivative of the sigmoid is required when calculating the error or performing back-propagation. We have two binary inputs, and the output will be 1 only when exactly one of the inputs is 1 and the other is 0. This means that, of the four possible combinations, only two will have 1 as output.

After that, the bias is added, and the activation function is applied to the entire expression. This is the basis for the forward propagation of a neural network. Here, the model’s predicted output for each test input exactly matches the conventional XOR logic gate output according to the truth table, and the cost function converges steadily. We’ll be using the sigmoid function in each of our hidden layer nodes and, of course, our output node.

Squared error loss

Since there may be many weights contributing to this error, we take the partial derivative with respect to each weight, one at a time, to find the minimum error.

This explains why the complexity of language is based on some hidden logical-mathematical rules to guide research practice. Both in the creation of the sequence of symbols and in the subsequent interpretation of the symbols, there is a hidden layer of assembly that is characterized by the fractal nature. The paper aims to give a general overview of achievements and trends in techniques for training spiking neural networks in the context of their hardware implementation, i.e. neuromorphic technology. Marvin Minsky and Seymour Papert, in their book ‘Perceptrons’, showed that the XOR gate cannot be solved by a single-layer perceptron (one with only an input and an output layer), since XOR is not linearly separable. This conclusion led to significantly reduced interest in Frank Rosenblatt’s perceptrons as a mechanism for building artificial intelligence applications.

The learning rate affects the size of each step when the weight is updated. If the learning rate is too small, convergence takes much longer, and the loss function could get stuck in a local minimum instead of reaching the global minimum. As mentioned, the simple Perceptron network has two distinct steps during the processing of inputs. Basically, in this calculation, the dot product of the input and weight vector is calculated.
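The effect of the step size on convergence speed can be seen on a toy problem. The sketch below assumes a one-parameter loss, loss(w) = w², chosen purely for illustration; the helper name `steps_to_converge` is hypothetical. It shows only the slow-convergence effect, since this loss has a single minimum and cannot trap the optimiser in a local one.

```python
def steps_to_converge(lr, w0=1.0, tol=1e-3, max_steps=100_000):
    """Count gradient descent steps on loss(w) = w**2 until |w| < tol."""
    w = w0
    for step in range(1, max_steps + 1):
        w -= lr * 2 * w  # the gradient of w**2 is 2*w
        if abs(w) < tol:
            return step
    return max_steps

steps = {lr: steps_to_converge(lr) for lr in (0.001, 0.01, 0.1)}
for lr, n in steps.items():
    print(f"learning rate {lr}: {n} steps")
```

Each tenfold reduction in the learning rate costs roughly ten times as many updates here, which is why the learning rate and the number of epochs are usually tuned together.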